The Evolution of Isolation AI

A New Data Paradigm

We’ve learned many lessons from the rise of social media that can be applied to AI.

Social media introduced a new path for human relationships by leveraging digital versions of real life.

Heavily oversimplified, it looks like this:

It was unprecedented. As a society, we have yet to adapt appropriately. (Anton Barba-Kay’s book on this is valuable.)

Somewhere along the way (I’m not too concerned about date precision), algorithms introduced a more streamlined way to view data. In 2012, Facebook made a major shift in its News Feed toward a “Timeline” that was fully algorithmic. You didn’t see things chronologically anymore. You saw what the algorithm wanted you to see. That algorithm was shaped by the people who worked at Facebook and by the users who told Facebook what they liked.

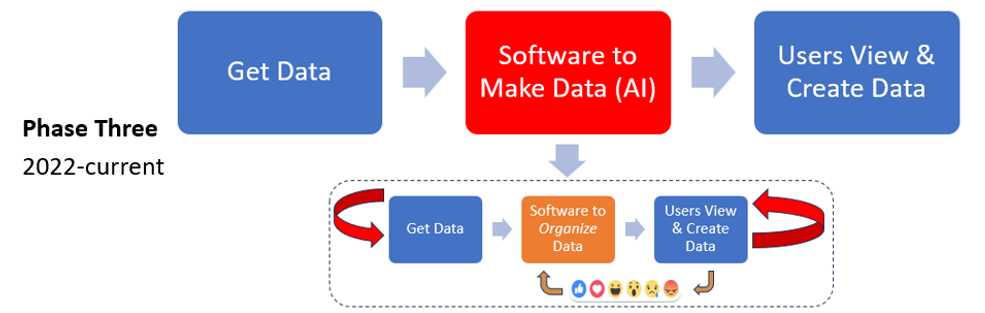

It started to build a feedback loop, which looked like this:

Algorithms now separated people’s digital pages from one another. While not all algorithms are AI, there’s enough overlap here that we need not get bogged down in definitions. On Twitter, Threads, and Bluesky, there is still a way to view content chronologically, without the mediation of AI, but few people use it.

The feedback loop was put on steroids in the form of advanced AI (this is where Machine Learning and Deep Learning took things to a new level). Chatbots like ChatGPT came onto the scene with a novel approach: instead of merely showing or organizing data, they used the feedback loops inherent in the internet to generate data.

In the early days of social media, Christians would ask, “Is Facebook safe for my daughter?” Most thought it was fine (or didn’t think about it at all) because the answer depended on whom your daughter befriended. Now, the question is much more difficult. Not only is your daughter’s content filtered through algorithms (not to mention the rise of online ads and p*rn bots), but across all internet platforms, AI is generating content designed to keep teens addicted to those platforms.

Social media was once a gateway to other people. Now, with AI, social media is primarily a gateway to the internet. We ask, “Is ChatGPT safe for my daughter?” but we don’t know how to answer, because we don’t know what data OpenAI will send her. In fact, OpenAI may change its algorithms over a weekend and then apologize for how they behave. This just happened. OpenAI continues to “move fast and break things” for the sake of learning.

Here’s Sam Altman, CEO and semi-cult-leader of OpenAI, apologizing on Twitter/X:

No Christian book on AI is going to teach you how to navigate all this. AI developments are impossible to predict. Impossible to neatly categorize. Things are moving too fast. But here are a few ways I think about AI.

Artificial Intelligence (AI) is a broad term that includes predictive AI (like text messaging suggestions, sales forecasting, traffic or weather predictions), generative AI (such as ChatGPT and social media chatbots), and multimodal AI (like Siri or Google Gemini). It’s an umbrella term, for better and worse. It can be narrow (accomplishing only one task) or general (accomplishing many tasks). One day, perhaps in the next 1-3 years, it will appear more intelligent than most humans on most knowledge questions. Some will worship it.

Generative AI, in its current form, is biased and unpredictable. Sam Altman (co-founder of OpenAI) talks openly about how AI must have a “worldview” to draw on, since AI must be trained toward human-created goals.

Generative AI is genuinely helpful. It is hard to appreciate just how valuable tools like Claude or ChatGPT are without personal experience. Here is a list of things I use them for and find beneficial: travel planning, feedback on finished articles, brainstorming, an advanced thesaurus, alliteration, an advanced dictionary (what does this complex set of words in this book mean?), finding Bible verses, learning what people generally think about things, and more. For ethical reasons, I prefer Claude over ChatGPT.

AI is not a fad. It is changing how we work. It is changing how we live. One in five people use GenAI at least weekly.

Consider the work of HBR.org writer Marc Zao-Sanders. He tallied up tens of thousands of internet comments to determine how people are using GenAI. He did this in 2024 and again in 2025.

Take some time to swallow these changes:

Did you notice the top three ways AI is being used? Number one is therapy and companionship. (Third is finding purpose… though I could not find what is meant by this.)

GenAI is a friend-replacer. It’s a pastor-replacer. At least in many ways. Snapchat has built AI into its app, and parental oversight technology cannot remove it. (By the way, Snapchat is bad for teens regardless of AI.) People are copying and pasting conversations into AI to figure out how to respond to their boyfriends or friends or whoever.

AI is made to be like humans because that is what the human heart is drawn to. When people make an idol, they don’t turn a stone into something that looks like a rock. They make it look like an animal, a human, or a god. Why? To entice the human heart toward it. To build allegiance. And allegiance = money.

Whereas social media was an app for “connection” (which it failed to deliver), AI is explicitly an isolation app. You do not connect with others through it. It is private. Solo. Alone.

It may be helpful to give some practical examples of what I believe AI should not be used for. I do not use GenAI to journal, think for me, write sermons, tell me how to parent, tell me what is right and wrong, or serve as my source of expertise. As Kevin DeYoung recently said, AI can be great for retrieving knowledge, but do not use it to create knowledge.

Much more could be said. At minimum, we must recognize three truths:

AI is accelerating the problems started by social media.

AI is creating new problems.

AI should not be given to children or teens. As Brad Littlejohn argues, this kind of dangerous technology should be regulated the way we regulate cars.

We must use wisdom in how we use (or abstain from) these platforms. Some people should stay off them completely. Maybe you should. Maybe you shouldn’t. Ask God. Ask your pastor. Ask your mom. Ask your small group. Don’t ask ChatGPT.

Today I am speaking at a church leadership conference on AI. Here is a link to a PDF handout that summarizes and outlines my workshop.

Recent podcasts I’ve produced:

Thinking About The Faith (Links: Website | Apple | Spotify):

Q13: Can Anyone Keep the Law of God Perfectly?

Q14: Did God Create Us Unable to Keep His Law?

Q15: Since No One Can Keep the Law, What Is Its Purpose?

Q16: What is Sin?

Q17: What is Idolatry?

What Would Jesus Tech (Links: Website | Apple | Spotify | YouTube):

Multiplying the Word: Creating Local Digital Content as a Church, with Pastor Luke Simmons

Choosing and Using GenAI as Christian

Multiplying the Word: Steward Your Story, with Ian Harbor

The Tech-Savvy Christian Faith | Re: Wes Huff's History of Technology & Christianity

Thanks for reading (and listening!).